IBM has released its Power8 CPU, and with it a bunch of servers and some related news, such as the OpenPower consortium, which has been around for a year or so now. Besides IBM, Tyan and Google have announced that they are building systems around the Power8 CPU, with a Tyan server on sale for some $2800. This is not a server with a high-end configuration, but it comes in a 2U rack mount case and is extensible with more disks and RAM.

There is one thing about the Power8 CPU that sets it apart from the previous Power CPUs and is worth mentioning, which is the byte ordering, or endianness, of the CPU. The order of the bytes in a value in a computer comes in two main flavours. There is the "Motorola style", AKA "big endian", which is how we write numbers in general, with the most significant digit (or byte, in the case of computers) first. And then we have the "Intel style", or "little endian", where the most significant byte comes last. All of this has little meaning to most of you so far. That said, big endian is old school mainframe style, but it is also used by many RISC CPUs. For those of you with a flair for weird facts, one oddball of endianness was the PDP-11, where a 32-bit value was stored as two 16-bit words, most significant word first, but with the two bytes inside each 16-bit word swapped into little endian order (this is called PDP-endian). And by the way, Oddball of Endianness would be a great name for a Country-Rock band (having at least 3 Telecaster equipped guitarists on stage).
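To make the three byte orders above concrete, here is a minimal bash sketch that prints the bytes of a 32-bit value in the order they would sit in memory. The function name `byte_order` and the sample value are made up for this illustration; nothing here is specific to Power8.

```bash
#!/bin/bash
# Print the four bytes of a 32-bit value in memory order for a given
# byte ordering. byte_order is a hypothetical helper for illustration.
# Usage: byte_order <big|little|pdp> <value>
byte_order() {
    local order=$1 value=$2
    local b3=$(( (value >> 24) & 0xFF ))   # most significant byte
    local b2=$(( (value >> 16) & 0xFF ))
    local b1=$(( (value >> 8) & 0xFF ))
    local b0=$(( value & 0xFF ))           # least significant byte
    case $order in
        # Big endian: most significant byte first.
        big)    printf '%02X %02X %02X %02X\n' "$b3" "$b2" "$b1" "$b0" ;;
        # Little endian: least significant byte first.
        little) printf '%02X %02X %02X %02X\n' "$b0" "$b1" "$b2" "$b3" ;;
        # PDP-endian: high 16-bit word first, bytes swapped inside each word.
        pdp)    printf '%02X %02X %02X %02X\n' "$b2" "$b3" "$b0" "$b1" ;;
        *)      echo "unknown byte order: $order" >&2; return 1 ;;
    esac
}

byte_order big    0x0A0B0C0D
byte_order little 0x0A0B0C0D
byte_order pdp    0x0A0B0C0D
```

For the value 0x0A0B0C0D, the big endian line reads just like the number is written, the little endian line is the exact reverse, and the PDP line is the oddball in between.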

What makes Power8 a bit different is that it supports operating systems using either big endian or little endian. Big endian is used by AIX and some Linuxes, but IBM is trying to get more Linuxes onto the little endian train. Among the big endian Linuxes on Power8 are Red Hat and derivatives like Fedora (note that CentOS isn't one of them so far, as CentOS 7 doesn't yet support Power at all, nor is 32-bit Intel supported anymore), as well as Debian and SUSE 11 (which will continue to be supported). The little endian Linuxes on Power8 so far include SUSE 12 and Ubuntu 14.04. But IBM really wants Linux on Power to run little endian, so it seems that Debian is on its way there, and Red Hat 7.1 will also support little endian. But to be honest, we don't have the full story yet.

There are some really good things about the Power8 architecture, like really good performance (see this one, for example, by MariaDB and IBM), and it is also a great platform for virtualization and cloud infrastructure. And all that fuzz with endianness will hopefully be history soon, when we have all Linuxes on Power8, as well as on x86_64 of course, running little endian (thank you). And the Country-Rock band mentioned above could call their first record "Fuzz with Endianness".

So, have I convinced you? If so, I guess you want to know what it costs. Well, Power8 machines from IBM start at around $10,000, which seems like a lot, but you have to remember that used the way this type of system is probably mostly used, as a host for numerous virtual machines, it is actually pretty reasonable. If you really want a less expensive machine, Tyan has one available which should sell for some $3,000, which looks more reasonable. Eventually I guess Tyan will sell their motherboards, and the components in these, besides the CPU, are pretty standard stuff (DDR RAM, PCI buses, SATA disks etc). But the CPU is pretty expensive. Anyway, there is a way around all this if you really want to try running Power8, and that is to use emulation. Once you get the hang of it, this works well, although slowly, but not so slowly that you cannot test things on it (though too slowly for production use, I guess).

I'll show you how to set up an emulated Power8 system in a later blog post, so stay tuned and Don't touch that dial!

/Karlsson

I am Anders Karlsson, and I have been working in the RDBMS industry for many, possibly too many, years. In this blog, I write about my thoughts on RDBMS technology, happenings and industry, and also on any wild ideas around that I might think up after a few beers.

Tuesday, December 16, 2014

Thursday, October 2, 2014

OraMySQL 1.0 Alpha released - Replication from Oracle to MariaDB and MySQL!!

Now it's time to release something useful! At least I hope so. I have been going through how I came up with this idea and how I came up with the implementation in a series of blog posts:

But now it's time for the real deal, the software itself. This is a 1.0 Alpha release, but it should work OK in the more basic setups. It's available for download from SourceForge, and here you find the source package, which uses GNU autotools to build. The manual is part of this download, but is also available separately.

You do need MariaDB libraries and include files to build it, but no Oracle specific libraries are needed, only Oracle itself of course. There are build instructions in the documentation.

Cheers and happy replication folks

/Karlsson

Wednesday, October 1, 2014

Replication from Oracle to MariaDB the simple way - Part 4

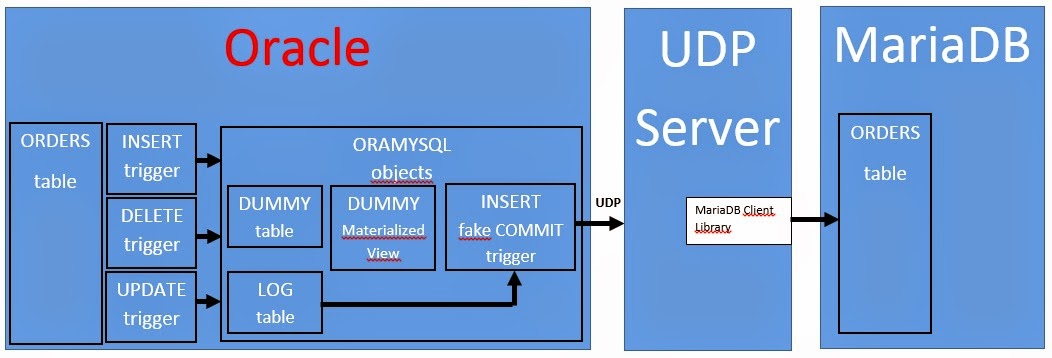

Now it's time to get serious about replicating to MariaDB from Oracle, and we are real close now, right? What I needed was a means of keeping track of what happens in a transaction, such as a LOG table of some kind, and then a way of applying this log to MariaDB when there is a COMMIT in Oracle. And the thing is, these two don't have to be related. So I can have one table that I write to, with a Materialized View on it that is refreshed on COMMIT, and separately a log table of some kind. And when the Materialized View is refreshed, as there is a COMMIT, the log can be applied. From a schematic point of view, it looks something like this:

This looks more complex than it actually is. All that is needed is some smart PL/SQL and this will work. I have not done much testing of any kind, except checking that the basics work, but I have written the PL/SQL that is needed for you, and the triggers on the orders table and what have you are also created for you by a shell script that can do this for any table.

As for the DUMMY table that I have to use to get a trigger on COMMIT, this doesn't have to have that many rows, I actually just INSERT into it once per transaction, and then I INSERT the transaction id, which I get from Oracle. This table will have some junk in it after a while, all the transactions that were started and COMMITted will have an entry here. But in my code for this, I have included a simple job that purges this table from inactive transactions.
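The log-then-apply-on-COMMIT idea can be sketched outside Oracle too. The bash below is only a simulation under stated assumptions: plain files stand in for the log table and for what reaches MariaDB, and the function names `log_change`, `commit_tx` and `rollback_tx` are hypothetical. It is not the PL/SQL from the actual package.

```bash
#!/bin/bash
# Simulation only: files stand in for the Oracle log table and for
# the stream of statements that eventually reaches MariaDB.
LOG_DIR=$(mktemp -d)
APPLIED=$LOG_DIR/applied.sql
: > "$APPLIED"

# Where a row trigger would write one statement to the log table.
log_change() {
    local txid=$1 stmt=$2
    printf '%s\n' "$stmt" >> "$LOG_DIR/tx_$txid.log"
}

# Where the COMMIT-time trigger fires: apply the log, then clear it.
commit_tx() {
    local txid=$1
    if [ -f "$LOG_DIR/tx_$txid.log" ]; then
        cat "$LOG_DIR/tx_$txid.log" >> "$APPLIED"
        rm -f "$LOG_DIR/tx_$txid.log"
    fi
}

# A rollback simply discards whatever was logged for the transaction.
rollback_tx() {
    rm -f "$LOG_DIR/tx_$1.log"
}

log_change 1 "INSERT INTO orders VALUES (1, 10, 99.50)"
log_change 2 "INSERT INTO orders VALUES (2, 11, 12.00)"
rollback_tx 2
commit_tx 1
cat "$APPLIED"
```

Only the statement from transaction 1 ends up in the applied file. The statement from the rolled back transaction 2 is never applied, which is the whole point of applying the log on COMMIT only.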

Best of all is that this works even with Oracle Express, so there is no need to pay for "Advanced Replication", not that I consider it really advanced or anything. I'd really like to know what you think about these ideas. Would it work? I know it's not perfect, far from it, but for the intent of having a MariaDB table reasonably well synchronized with an Oracle table, this should work. Or? The solution is on one hand simple and lightweight, but I have given up on a number of features, and the design possibly also affects performance a bit. But it should be good enough for many uses, I think.

Let me hear what you think, I'm just about to release this puppy!

/Karlsson

Monday, September 29, 2014

Replication from Oracle to MariaDB the simple way - Part 3

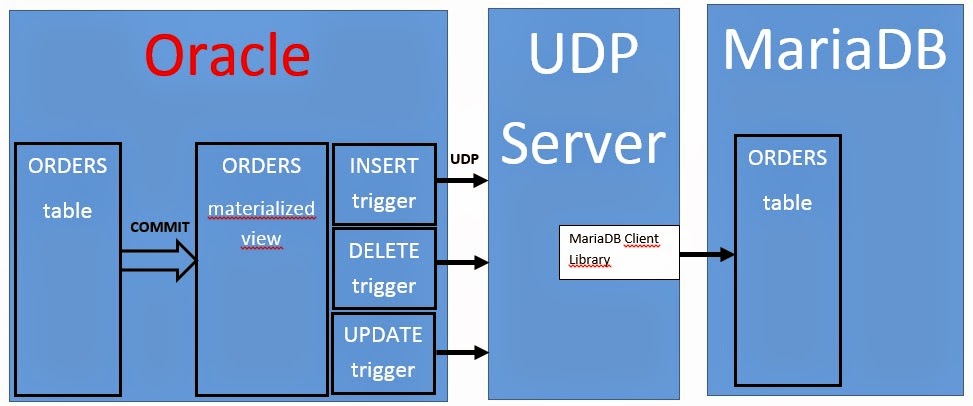

In this third installment in this series, I'll explain why the smart solution I described in the previous post actually wasn't that good, and then I go on to explain how to fix it, and why that fix wasn't such a smart thing after all. So, this was the design we ended with last time:

We have Oracle replicating to a Materialized View, to ensure that we can run triggers when there is a commit, and then triggers on this Materialized View update MariaDB by sending a UDP message to a server that in turn is connected to MariaDB.

The issue with the above thingy was that a Materialized View by default is refreshed in its entirety when there is a refresh, so if the table has 10,000 rows and 1 is inserted, then there will be 20,001 messages sent to MariaDB (10,000 rows deleted, 10,001 inserted). Not fun. So it seems that Materialized Views in Oracle aren't so smart, but I was sure they couldn't be this dumbed down; if they were, no one would be using them. So I rush for the Oracle documentation, yiihaa!
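The arithmetic behind that message count is simple enough to put in a few lines of bash. This is just a sketch of the counting, with hypothetical function names; it does not talk to Oracle at all.

```bash
#!/bin/bash
# Messages sent when the whole materialized view is rebuilt after
# `inserted` new rows are added to a base table that held `rows` rows:
# every old row is deleted, then every row (old and new) is inserted.
complete_refresh_messages() {
    local rows=$1 inserted=$2
    echo $(( rows + rows + inserted ))
}

# Messages sent if only the changed rows were propagated.
incremental_messages() {
    local inserted=$1
    echo "$inserted"
}

echo "complete refresh: $(complete_refresh_messages 10000 1)"
echo "changed rows only: $(incremental_messages 1)"
```

The cost of the complete refresh grows with the size of the table, not with the size of the change, which is why it hurts more and more as the table fills up.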

The default way of updating a Materialized View is not that fast, but there is a supposedly fast alternative method, appropriately named FAST (why the default method isn't called something like AWFULLY CRAZY SLOW is beyond me). So the materialized view using FAST REFRESH for the orders table should really be created like this:

    CREATE MATERIALIZED VIEW orders_mv
      REFRESH FAST ON COMMIT
      AS SELECT *
        FROM orders;

But this gives an error from Oracle:

    ORA-23413: table "SYSTEM"."ORDERS" does not have a materialized view log

Well then, let's create a MATERIALIZED VIEW LOG for table ORDERS, that's no big deal:

    CREATE MATERIALIZED VIEW LOG ON orders;

But again I get an error, this time indicating that old Larry has run out of gas in his MIG-21 and needs my money to fill it up again, so he can fly off to his yacht:

    ORA-00439: feature not enabled: Advanced replication

Grrnn (this is a sound I make when I get a bit upset)! Yes, if you want Materialized Views to work properly, the way they were designed, you need to part with some $$$, and as the cheap person I am, I run Oracle Express instead of the SE or EE editions, as I'd rather spend my hard-earned money on expensive beer than on Larry's stupid MIG-21. So, as they say, "Close, but no cigar".

But I'm not one to give up easily, as you probably know. And fact is, I don't need the whole Materialized View thing, all I want is a TRIGGER to execute on COMMIT. Hmm this requires a huge box of prescription drugs to fix, and I am already on the case. Sorry Larry, but you'll have to park your MIG-21 and have someone else buy you some gas.

More details on how I tricked Larry in the next part of this series on replication from Oracle to MariaDB.

/Karlsson

Replication from Oracle to MariaDB the simple way - Part 2

The theme for this series of posts is, as indicated in the previous post, "Try and try again", and there will be more of this now that I start to make this work by playing with Oracle, with PL/SQL and with the restrictions of Oracle Express (which is the version I have available).

So, what we have right now is a way of "sending" SQL statements from Oracle to MariaDB, the question is when and how to send them from Oracle. The idea for this was then to use triggers on the Oracle tables to send the data to MariaDB, like this, assuming we are trying to replicate the orders table from Oracle to MariaDB:

In Oracle, and assuming that the extproc that I have created to send UDP messages is called oraudp, I would do something like this:

    CREATE OR REPLACE TRIGGER orders_insert
      AFTER INSERT ON orders
      FOR EACH ROW
    BEGIN
      oraudp('INSERT INTO orders VALUES ('||
        :new.order_id||', '||:new.customer_id||', '||
        :new.amount||')');
    END;
    /

    CREATE OR REPLACE TRIGGER orders_update
      AFTER UPDATE ON orders
      FOR EACH ROW
    BEGIN
      oraudp('UPDATE orders SET order_id = '||:new.order_id||
        ', customer_id = '||:new.customer_id||', amount = '||:new.amount||
        ' WHERE order_id = '||:old.order_id);
    END;
    /

    CREATE OR REPLACE TRIGGER orders_delete
      AFTER DELETE ON orders
      FOR EACH ROW
    BEGIN
      oraudp('DELETE FROM orders WHERE order_id = '||:old.order_id);
    END;
    /

As I noted in my previous post though, DML TRIGGERs execute when the DML statement they are defined for executes, not when that DML is committed, which means that if I replicate to MariaDB in a trigger and then roll back, the data will still be replicated to MariaDB. So the above design wasn't such a good one after all. But the theme is, as I said, try and try again, so off to think and some googling.

Google helped me find a workaround, which is to use Oracle MATERIALIZED VIEWs. And I know what you think, you think "What has dear old Karlsson been smoking this time, I bet it's not legal", how is all this related to MATERIALIZED VIEWs? Not at all, actually. This is a bit of a kludge, but it does actually work, and that is the nature of a kludge (I've done my fair share of these over the years). There are two attributes of Oracle MATERIALIZED VIEWs that make this work for us:

- A MATERIALIZED VIEW can be updated ON COMMIT, which is one of the two ways a MATERIALIZED VIEW is updated (the other is ON DEMAND). So when there is a COMMIT that affects the tables underlying the MATERIALIZED VIEW, we have something happening.

- And the above would be useless if you couldn't attach a TRIGGER to a MATERIALIZED VIEW, but you can!

In practice, if we continue to use the orders table, this is what I would do in Oracle:

    CREATE MATERIALIZED VIEW orders_mv
      REFRESH ON COMMIT
      AS SELECT order_id, customer_id, amount
        FROM orders;

    CREATE OR REPLACE TRIGGER orders_mv_insert
      AFTER INSERT ON orders_mv
      FOR EACH ROW
    BEGIN
      oraudp('INSERT INTO orders VALUES ('||
        :new.order_id||', '||:new.customer_id||', '||
        :new.amount||')');
    END;
    /

    CREATE OR REPLACE TRIGGER orders_mv_update
      AFTER UPDATE ON orders_mv
      FOR EACH ROW
    BEGIN
      oraudp('UPDATE orders SET order_id = '||:new.order_id||
        ', customer_id = '||:new.customer_id||', amount = '||:new.amount||
        ' WHERE order_id = '||:old.order_id);
    END;
    /

    CREATE OR REPLACE TRIGGER orders_mv_delete
      AFTER DELETE ON orders_mv
      FOR EACH ROW
    BEGIN
      oraudp('DELETE FROM orders WHERE order_id = '||:old.order_id);
    END;
    /

Now we have a solution that actually works, and it also works with Oracle transactions, nothing will be sent to MariaDB if a transaction is rolled back. There is a slight issue with this though, you might think it's no big deal, but fact is that performance sucks when we start adding data to the orders table, and the reason for this is that the default way for Oracle to refresh a materialized view is to delete all rows and then add the new ones. In short, Oracle doesn't keep track of the changes to the base table, except writing them to the base table just as usual, so when the MATERIALIZED VIEW is to be refreshed, all rows in the materialized view will first be deleted, and then all the rows in the base table inserted. So although this works, it is painfully slow.

So it's back to the drawing board, but we are getting closer, now it works at least, we just have a small performance problem to fix. I'll show you how I did that in the next post in this series, so see you all soon. And don't you dare skip a class...

/Karlsson

Saturday, September 27, 2014

Replication from Oracle to MariaDB the simple way - Part 1

Yes, there is a simple way to do this. Although it might not be so simple unless you know how to do it, so let me show you how this can be done. It's actually pretty cool. But I'll do this over a number of blog posts, and this is just an introductory blog, covering some of the core concepts and components.

But getting this to work wasn't easy. I had to try several things before I got it right, and it's not really obvious at first how you make it work, so this is a story along the lines of "If at first you don't succeed, Mr. Kidd" "Try and try again, Mr. Wint", from my favorite villains in the Bond movie "Diamonds Are Forever":

So, I had an idea of how to achieve replication from Oracle to MySQL and I had an idea on how to implement it, and it was rather simple, so why not try it.

So, part 1 then. Oracle has the ability to let you add a UDF (User Defined Function), just like MariaDB (and MySQL), but Oracle calls them extprocs. So my first idea was an extproc that would send a message over UDP, any generic message. In this specific case though, I was to have a server that received that UDP message and then sent it to MariaDB as a query. So this server was connected to MariaDB, a message came in through UDP, and this message would of course be some DML statement. Simple and efficient, right? Of course UDP is not a reliable protocol, but for this usage it should be OK, right?

All that was needed after this was a set of triggers on the Oracle tables that would send a message to the server which would then send these to MySQL, and this message would be based on the changed data, as provided by the trigger, like a corresponding INSERT, UPDATE or DELETE statement. This should work. OK, let's get to work.
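To give a feel for what such a message could look like, here is a small bash sketch that builds an INSERT statement from row values and sends it as one UDP datagram. It is not the extproc itself, which lives inside Oracle. The function names, the host and the port are assumptions, and the send relies on bash's /dev/udp redirection, which is only there if bash was built with network redirections.

```bash
#!/bin/bash
# Build the INSERT statement a row trigger would send for one orders row.
# build_insert and send_udp are hypothetical helpers for illustration.
build_insert() {
    local order_id=$1 customer_id=$2 amount=$3
    printf 'INSERT INTO orders VALUES (%s, %s, %s)' \
        "$order_id" "$customer_id" "$amount"
}

# Fire-and-forget send of one statement over UDP. There is no reply and
# no delivery guarantee, so a lost message goes unnoticed by the sender.
send_udp() {
    local host=$1 port=$2 message=$3
    printf '%s' "$message" > "/dev/udp/$host/$port"
}

# Example call (host and port are placeholders, not taken from the package):
#   send_udp 127.0.0.1 9999 "$(build_insert 1 42 99.50)"
build_insert 1 42 99.50
echo
```

Note that the sender never learns whether anybody received the statement, which is exactly the trade-off with UDP mentioned above.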

My first attempt at the server meant that a lot of UDP messages were lost. The reason for this was that there were just too many messages coming in bursts (this is how extprocs work in Oracle, nothing wrong with Oracle here, it's just the nature of the beast). The solution, or so I thought, was to make the server multithreaded, with one tight thread receiving messages on the UDP port and putting them on a queue, and another thread processing the queue and sending the messages to MariaDB.

Well, this didn't work out either. The reason was that I synchronized the threads using mutexes, and this also slowed things down. It worked OK for a while, but a burst could easily mean that UDP messages were lost, and not much load was really needed for this to happen.

So, what do we do now? I had two choices. One was to skip UDP and go for a connected TCP solution instead. The issue with this was partly the extra overhead it would cause on the Oracle end of things, and partly that it would increase the complexity of my extproc and server, and above all of the API to all this. The other option was to go for a lock-less queue. Some googling and some testing allowed me to figure out how to do this with gcc, and I could move on and test it. Fact is, it worked fine. And as UDP messages can't be routed, using UDP messages in and of themselves made things a bit more secure.

But then there was the issue with the TRIGGERs calling the extproc. A trigger is called on a DML operation, but that operation might later be rolled back, and in that case I would break the consistency of the data. And for some reason, for all the enhancements to standard SQL that Oracle provides (including DDL triggers), there are no ON COMMIT triggers. But there just had to be a way around that one, right?

So, try and try again, Mr. Wint. Yes, I will solve all this, but the story is to be continued in another blog post. Also, I will eventually provide the code for all this: GPL code that you can use out of the box to replicate from Oracle to MariaDB. But not just yet.

So don't touch that dial!

/Karlsson

Thursday, August 14, 2014

Script to manage MaxScale

MaxScale 1.0 from SkySQL is now in Beta and there are some cool features in it, and I guess some adventurous people have already put it into production. There are still some rough edges and stuff to be fixed, but it is clearly close to GA. One thing missing though is something to manage starting and stopping MaxScale in a somewhat controlled way, which is what this blog is all about.

I have developed two simple scripts that should help you manage MaxScale in a reasonable way, but before we go into the actual scripts, there are a few things I need to tell you. To begin with, if you haven't yet downloaded MaxScale 1.0 beta, you can get it from MariaDB.com: just go to Resources->MaxScale, and to get to the downloads you first need to register (which is free). There are downloads for rpms and source here, but if you are looking for a binary tarball, there seems to be none. Well, actually there is: the first link under "Source Tarball" is in fact a binary tarball. I have reported this, so by the time you read this, it might have been fixed. Of course you can always get the source from github and build it yourself.

Anyway, for MaxScale to start, you need a configuration file and you have to set the MaxScale home directory. If you are on CentOS or RedHat and install the rpms (which is how I set it up), MaxScale is installed in /usr/local/skysql/maxscale, and this is also what MAXSCALE_HOME needs to be set to. MaxScale can take a configuration file argument, but if this isn't passed, then MAXSCALE_HOME/etc/MaxScale.cnf will be used. In addition, you will probably have to add MAXSCALE_HOME/lib to your LD_LIBRARY_PATH variable.

All in all, there are some environment variables to set before we can start MaxScale, and this is the job of the first script, maxenv.sh. For this to work, it has to be placed in the bin directory under MAXSCALE_HOME. In this script we also set MAXSCALE_USER, which is the Linux user that will run MaxScale and is used by the MaxScale start / stop script explained later. You can set this to an empty string to run MaxScale as the current user, which is the normal way that MaxScale runs, but in that case you need to make sure that the user in question has write access to MAXSCALE_HOME and its subdirectories.

So, here we go, here is maxenv.sh. Copy this into the bin directory under your MAXSCALE_HOME directory and use it as it is (note that I set the user to run MaxScale to mysql, so if you don't have that user, then create it or modify maxenv.sh accordingly):

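#!/bin/bash
#
# maxenv.sh - set up the environment for MaxScale.
# Must live in the bin directory under MAXSCALE_HOME.
#

# MAXSCALE_HOME is the parent of the directory this script is in.
export MAXSCALE_HOME=$(cd "$(dirname "${BASH_SOURCE[0]}")/.."; pwd)

# Linux user to run MaxScale as. Leave empty to run as the current user.
export MAXSCALE_USER=mysql

PATH=$PATH:$MAXSCALE_HOME/bin

# Add MaxScale lib directory to LD_LIBRARY_PATH, unless it is already there.
if [ `echo $LD_LIBRARY_PATH | awk -v RS=: '{print $0}' | grep -c "^$MAXSCALE_HOME/lib$"` -eq 0 ]; then
    # Avoid a leading colon when LD_LIBRARY_PATH was empty.
    export LD_LIBRARY_PATH=${LD_LIBRARY_PATH:+$LD_LIBRARY_PATH:}$MAXSCALE_HOME/lib
fi

export MAXSCALE_PIDFILE=$MAXSCALE_HOME/log/maxscale.pid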
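
The "add it unless it is already there" check for LD_LIBRARY_PATH can also be written without awk and grep. The following is just a sketch of that idea and not part of maxenv.sh; the function name append_path_once and the directories in the example are made up for illustration.

```shell
#!/bin/bash
# Hypothetical helper, not part of maxenv.sh: print the colon-separated
# list $1 with directory $2 appended, unless $2 is already one of its entries.
append_path_once() {
    local list=$1 dir=$2
    case ":$list:" in
        # Already present: print the list unchanged.
        *":$dir:"*) printf '%s\n' "$list" ;;
        # Not present: append, without a leading colon if the list was empty.
        *)          printf '%s\n' "${list:+$list:}$dir" ;;
    esac
}

# Example with made-up directories:
append_path_once "/usr/lib:/opt/lib" "/usr/local/skysql/maxscale/lib"
```

Wrapping the list in colons before matching means that an entry only matches as a whole, so /opt/lib does not count as being present just because /opt/lib64 is.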

Now it's time for maxctl, which is the script that starts and stops MaxScale. This must also be placed in the MAXSCALE_HOME/bin directory, and as it relies on maxenv.sh above, you need both scripts to use maxctl. The script is rather long and there are probably a thing or two missing anyway, but for me this has been useful:

#!/bin/bash
#
# Script to start and stop MaxScale.
#

# Set up the environment
. "$(cd "$(dirname "$0")"; pwd -P)/maxenv.sh"

# Set default variables
NOWAIT=0
QUIET=0
MAXSCALE_PID=0

# Get pid of MaxScale if it is running.
# Check that the pidfile exists.
if [ -e "$MAXSCALE_PIDFILE" ]; then
    MAXSCALE_PID=`cat "$MAXSCALE_PIDFILE"`
    # Check if the process is running.
    if [ `ps --no-heading -p $MAXSCALE_PID | wc -l` -eq 0 ]; then
        MAXSCALE_PID=0
    fi
fi

# Function to print output
printmax() {
    if [ $QUIET -eq 0 ]; then
        echo "$*" >&2
    fi
}

# Function to print help
helpmax() {
    echo "Usage: $0 [options] start|stop|status|restart"
    echo "Options:"
    echo "-f FILE - MaxScale config file"
    echo "-h - Show this help"
    echo "-n - Don't wait for operation to finish before exiting"
    echo "-q - Quiet operation"
}

# Function to start maxscale
startmax() {
    # Check if MaxScale is already running.
    if [ $MAXSCALE_PID -ne 0 ]; then
        printmax "MaxScale is already running"
        exit 1
    fi

    # Check that we are running as root if a user to run as is specified.
    if [ "x$MAXSCALE_USER" != "x" -a `id -u` -ne 0 ]; then
        printmax "$0 must be run as root"
        exit 1
    fi

    # Check that we can find maxscale
    if [ ! -e "$MAXSCALE_HOME/bin/maxscale" ]; then
        printmax "Cannot find MaxScale executable ($MAXSCALE_HOME/bin/maxscale)"
        exit 1
    fi

    # Check that the config file exists, if specified.
    if [ "x$MAXSCALE_CNF" != "x" -a ! -e "$MAXSCALE_CNF" ]; then
        printmax "MaxScale configuration file ($MAXSCALE_CNF) not found"
        exit 1
    fi

    # Start MaxScale
    if [ "x$MAXSCALE_USER" == "x" ]; then
        $MAXSCALE_HOME/bin/maxscale -c $MAXSCALE_HOME ${MAXSCALE_CNF:+-f $MAXSCALE_CNF}
    else
        su $MAXSCALE_USER -m -c "$MAXSCALE_HOME/bin/maxscale -c $MAXSCALE_HOME ${MAXSCALE_CNF:+-f $MAXSCALE_CNF}"
    fi
}

# Function to stop maxscale. Waits for MaxScale to exit unless NOWAIT is set.
stopmax() {
    # Check that we are running as root if a user to run as is specified.
    if [ "x$MAXSCALE_USER" != "x" -a `id -u` -ne 0 ]; then
        printmax "$0 must be run as root"
        exit 1
    fi

    # Check that the pidfile exists.
    if [ ! -e "$MAXSCALE_PIDFILE" ]; then
        printmax "Can't find MaxScale pidfile ($MAXSCALE_PIDFILE)"
        exit 1
    fi
    MAXSCALE_PID=`cat "$MAXSCALE_PIDFILE"`

    # Kill MaxScale (kill sends SIGTERM by default)
    kill $MAXSCALE_PID

    if [ $NOWAIT -eq 0 ]; then
        # Wait for maxscale to die.
        while [ `ps --no-heading -p $MAXSCALE_PID | wc -l` -ne 0 ]; do
            # sleep 0.1 instead of usleep, which is not installed everywhere.
            sleep 0.1
        done
        MAXSCALE_PID=0
    fi
}

# Function to show the status of MaxScale
statusmax() {
    # MAXSCALE_PID is non-zero only if the pidfile exists and the process is alive.
    if [ $MAXSCALE_PID -ne 0 ]; then
        printmax "MaxScale is running (pid: $MAXSCALE_PID user: `ps -p $MAXSCALE_PID --no-heading -o euser`)"
        exit 0
    fi
    printmax "MaxScale is not running"
    exit 1
}

# Process options.
while getopts ":f:hnq" OPT; do
    case $OPT in
        f)
            MAXSCALE_CNF=$OPTARG
            ;;
        h)
            helpmax
            exit 0
            ;;
        n)
            NOWAIT=1
            ;;
        q)
            QUIET=1
            ;;
        :)
            echo "Option -$OPTARG requires an argument" >&2
            exit 1
            ;;
        \?)
            echo "Invalid option: -$OPTARG" >&2
            exit 1
            ;;
    esac
done

# Process arguments following options.
shift $((OPTIND - 1))
OPER=$1

# Check that an operation was passed
if [ "x$1" == "x" ]; then
    echo "$0: you must enter an operation: start|stop|restart|status" >&2
    exit 1
fi

# Handle the operations.
case $OPER in
    start)
        startmax
        ;;
    stop)
        stopmax
        ;;
    status)
        statusmax
        ;;
    restart)
        if [ $MAXSCALE_PID -ne 0 ]; then
            # Always wait for the old process to exit before starting a new one.
            NOWAITSAVE=$NOWAIT
            NOWAIT=0
            stopmax
            NOWAIT=$NOWAITSAVE
        fi
        startmax
        ;;
    *)
        echo "Unknown operation: $OPER. Use start|stop|restart|status" >&2
        exit 1
        ;;
esac

To use this script, you call it with one of the 4 main operations: start, stop, restart or status. If MaxScale is to run as a specific user, you have to run maxctl as root (or using sudo):

sudo /usr/local/skysql/maxscale/bin/maxctl start

Also, there are some options you can pass:

- -h - Print help

- -f FILE - Run with the specified configuration file instead of MAXSCALE_HOME/etc/MaxScale.cnf

- -n - Don't wait for stop to finish before returning. Stopping MaxScale by sending a SIGTERM is how it is to be done, but it takes a short time for MaxScale to stop completely. By default maxctl will wait until MaxScale is stopped before returning; with this option maxctl returns immediately.

- -q - Quiet operation
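
One thing to be aware of is that waiting for MaxScale to stop has no upper limit, so if MaxScale for some reason ignores the SIGTERM, maxctl will wait forever. Below is a sketch of how a wait with a timeout could look. This is not part of maxctl; the function name wait_for_exit is made up, and it assumes that the caller is allowed to signal the process (which is the case when maxctl is run as root), as kill -0 is used to test if the process is still there.

```shell
#!/bin/bash
# Hypothetical helper, not part of maxctl: wait until process $1 has exited,
# but give up after $2 seconds.
# Returns 0 if the process is gone and 1 on timeout.
wait_for_exit() {
    local pid=$1 timeout=$2
    # SECONDS is a bash builtin counting seconds since the shell started.
    local deadline=$((SECONDS + timeout))
    # kill -0 sends no signal, it only tests if the pid can be signalled.
    while kill -0 "$pid" 2>/dev/null; do
        if [ "$SECONDS" -ge "$deadline" ]; then
            return 1
        fi
        sleep 0.1
    done
    return 0
}

# Possible use after "kill $MAXSCALE_PID" in a stop function:
#   wait_for_exit $MAXSCALE_PID 30 || echo "MaxScale did not stop in time" >&2
```

What to do on a timeout is a matter of taste: just reporting it is the careful choice, whereas following up with a SIGKILL is the heavy-handed one.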

Monday, August 4, 2014

What is HandlerSocket? And why would you use it? Part 1

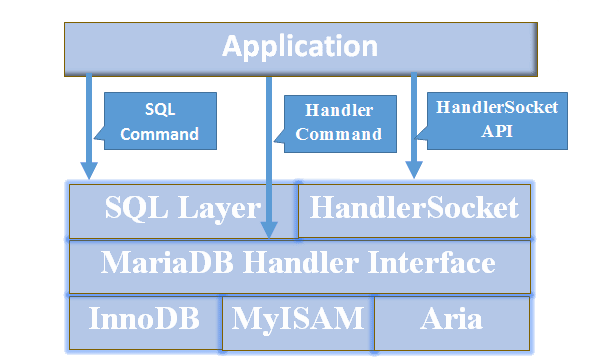

HandlerSocket is included with MariaDB and acts like a simple NoSQL interface to InnoDB, XtraDB and Spider and I will describe it a bit more in this and a few upcoming blogs.

So, what is HandlerSocket? Adam Donnison wrote a great blog on how to get started with it, but if you are developing MariaDB applications using C, C++, PHP or Java what good does HandlerSocket do you?

HandlerSocket in itself is a MariaDB plugin, of a type that is not that common, as it is a daemon plugin. Adam shows in his blog how to enable and install it, so I will not cover that here. Instead I will describe what it does, and doesn't do.

A daemon plugin is a process that runs "inside" MariaDB. A daemon plugin can implement anything really, as long as it is relevant to MariaDB. One daemon plugin is, for example, the Job Queue Daemon, and another is HandlerSocket. A MariaDB plugin has access to the MariaDB internals, so there are a lot of things that can be implemented in a daemon plugin, even if it is a reasonably simple concept.

Inside MariaDB there is an internal "handler" API which is used as an interface to the MariaDB Storage Engines, although not all engines support this interface. MariaDB also has a means to "bypass" the SQL layer and access the Handler interface directly, and this is done by using the HANDLER commands. Note that we are not talking about a different API for the HANDLER commands; they are executed using the same SQL interface that you are used to, it's just that you use a different set of commands. One limitation of these commands is that they only allow reads, even though the internal Handler interface supports writes as well as reads.

When you use the HANDLER commands, you have to know not only the name of the table that you access, but also the index you will be using when you access that table (assuming that you want to use an index, which you probably do). A simple example of using the HANDLER commands follows here:

# Open the orders table, using the alias o
HANDLER orders OPEN AS o;

# Read an order record using the primary key.
HANDLER o READ `PRIMARY` = (156);

# Close the order table.
HANDLER o CLOSE;

In the above example, I guess this is just overcomplicating something basically simple, as all this does is the same as

SELECT * FROM orders WHERE id = 156;

The advantage of using the Handler interface, though, is performance: for large datasets, using the Handler interface is much faster.
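
The HANDLER commands are more interesting when you read more than one row, as you can position on a key and then continue along the index. Here is a small sketch of this, as a shell function that prints the statements so that they can be piped into the mysql client. The function name handler_range_sql is made up, and I assume the same orders table with a numeric primary key as in the example above.

```shell
#!/bin/bash
# Hypothetical helper: print a HANDLER session that reads rows of table $1
# (opened with alias $2) in primary key order, starting at key value $3
# and fetching up to $4 rows per read.
handler_range_sql() {
    local table=$1 alias=$2 start=$3 limit=$4
    printf 'HANDLER %s OPEN AS %s;\n' "$table" "$alias"
    # Position on the first key >= the start value and read from there.
    printf 'HANDLER %s READ `PRIMARY` >= (%s) LIMIT %s;\n' "$alias" "$start" "$limit"
    # Continue along the index from where the previous read stopped.
    printf 'HANDLER %s READ `PRIMARY` NEXT LIMIT %s;\n' "$alias" "$limit"
    printf 'HANDLER %s CLOSE;\n' "$alias"
}

# Print the statements; to run them, pipe the output into the mysql client.
handler_range_sql orders o 156 10
```

Note that the handler stays open between the reads, which is what makes this different from running two separate SELECT statements.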

All this brings up three questions:

- First, if we are bypassing the SQL layer by using the HANDLER commands, would it not be faster to bypass the SQL level protocol altogether, and just use a much simpler protocol?

- Secondly, while we are at it, why don't we allow writes, as this is the biggest issue? We can always speed up reads by scaling out anyway.

- And last, how much faster is this, really?

/Karlsson

Friday, July 11, 2014

MariaDB Replication, MaxScale and the need for a binlog server

Introduction

This is an introduction to MariaDB Replication, to why we need a binlog server and to what this is. The first part is an introduction to replication basics, and if you know this already, then you might want to skip past the first section or two.

MariaDB Replication

MySQL and MariaDB have a simple but very effective replication system built in. The replication system is asynchronous and is based on a pull, instead of a push, system. What this means in short is that the master keeps track of the DML operations and other things that might change the state of the master database, and this is stored in what is called the binlog. The slave on the other hand is responsible for getting the relevant information from the master to keep up to speed. The binlogs consist of a number of files that the master generates, and the traditional way of dealing with slaves is to point them to the master, specifying a starting point in the binlogs consisting of a filename and a position.

When a slave is started it gets the data from the binlogs, one record at a time, from the given position in the master binlogs, and in the process updates its current binlog file and position. So the master keeps track of the transactions and the slave follows behind as fast as it can. The slave has two types of threads: the IO thread, which gets data from the master and writes it to a separate relay log on the slave, and the SQL thread, which applies the data from the relay log to the slave database.
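
The state of the two slave threads is shown by SHOW SLAVE STATUS, in the Slave_IO_Running and Slave_SQL_Running columns. A minimal sketch of checking them from a shell script could look like this; the function name slave_threads_running is made up, and it expects the vertical (\G) output format on stdin.

```shell
#!/bin/bash
# Hypothetical helper: read the output of SHOW SLAVE STATUS\G on stdin and
# succeed only if both the IO thread and the SQL thread report "Yes".
slave_threads_running() {
    awk -F': ' '
        # Anchor at the end of the column name, so that columns with a
        # longer name, such as Slave_SQL_Running_State, are not matched.
        $1 ~ /Slave_IO_Running$/  { io  = $2 }
        $1 ~ /Slave_SQL_Running$/ { sql = $2 }
        END { exit !(io == "Yes" && sql == "Yes") }
    '
}

# Possible use on a slave:
#   mysql -e 'SHOW SLAVE STATUS\G' | slave_threads_running || echo "replication is not running" >&2
```

Both threads have to be checked, as the IO thread can happily keep filling the relay log while the SQL thread has stopped on an error.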

This really is less complicated than it sounds, in a way, but the implementation of it on the other hand is probably more complicated than one might think. There are also some issues with this setup, some of which are fixed by the recent GTID implementation in MySQL 5.6 and, more significantly, in MariaDB 10.

In a simple setup with just 1 master and a few slaves, this is all there is to it: take a backup from the master and take care to keep track of the binlog position when this is done, then restore this backup to a slave and set the slave starting position to the position when the backup was taken. Now the slave will catch up with the operations that have happened since the backup was run, and eventually it will catch up with the master and then poll for any new events.
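
Setting the slave starting position is done with CHANGE MASTER TO. As a sketch, the function below prints the statements to run on the restored slave; the function name change_master_sql as well as the host, user, binlog file and position in the example are made up, and the password is left out on purpose.

```shell
#!/bin/bash
# Hypothetical helper: print the statements that point a freshly restored
# slave at the master, starting from the binlog file and position that
# were recorded when the backup was taken.
change_master_sql() {
    local host=$1 user=$2 file=$3 pos=$4
    cat <<EOF
CHANGE MASTER TO
  MASTER_HOST='$host',
  MASTER_USER='$user',
  MASTER_LOG_FILE='$file',
  MASTER_LOG_POS=$pos;
START SLAVE;
EOF
}

# Made-up host, user and binlog coordinates.
change_master_sql master.example.com repl mysql-bin.000042 107
```

In a real setup you would also pass MASTER_PASSWORD, and the file and position would come from the backup itself.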

One thing that is not always the case with other database systems is that the master and the slave differ only in their configuration. Apart from this, these servers run the same software and the same operations can be applied to them, so running DML on a slave is no different than on a master. But if there is a collision between some data that was entered manually on the slave and some data that arrives through replication, say a duplicate primary key, then replication will stop.

What do we use replication for

Replication is typically used for one or more of three purposes:

• Read Scale-out - In this case the slaves are used for serving reads, whereas all writes go to the master. As most web applications have a much larger amount of reads than writes, this makes for good scalability: the fewer writes are handled by the single master, whereas the many reads can be served by one or more slaves.

• Backup - Using a slave for backup is usually a pretty good idea. This even allows for cold backups: if we shut down the slave to back it up, it will catch up with the master once restarted after the backup is done. Having a full database setup on a slave for backup also means that recovery, if we need to do that, is fast, and it also allows for partial recovery if necessary.

• High Availability - As the master and slave are kept in sync, at least with some delay, one can use the slave to fail over to should the master fail. The asynchronous nature of replication does mean, though, that failover is a bit more complex than one might think. There might be data in the relay log on the slave that has not yet been applied, which might cause issues when the slave is treated as a master. Also, there might be data in the binlogs on the master that the slave never picked up, which means that when the old master is brought on-line again, it might be out of sync with the new one: some data that was never picked up by the slave is on the old master, and data that entered the system after the slave was switched to a master is not.

The nature of replicated data

In the old days, the way replication worked was by just sending any statement that modified data on the master to the slave. This way of working is still available, but over time it was realized that this was a bit difficult, in particular with some storage engines.

This led to the introduction of Row-based Replication (RBR), where the data to be replicated is transferred not as a SQL statement but as a binary representation of the data to be modified.

Replicating the SQL statement is called Statement Based Replication (SBR). An example of a statement that can cause issues when using SBR is:

DELETE FROM test.tab1 WHERE id > 10 LIMIT 5

In this case RBR will work, whereas when using statement based replication we cannot determine which rows will be deleted: without an ORDER BY, the master and the slave are free to pick different rows. There are more examples of such non-deterministic SQL statements where SBR fails but RBR works.

A third replication format is available, MIXED, where MariaDB decides on a statement by statement basis which replication format is best.

Scaling replication

Eventually many users ended up having many slaves attached to that single master. For a while this was not a big issue, as the asynchronous nature of things means that the load on the master is limited when using replication, but with enough nodes, eventually this turned into an issue.

The solution then was to introduce an "intermediate master". This is a slave that is also a master to other slaves, and it is configured with log_slave_updates on, which means that data that is applied from the relay log to the slave database is also written to the slave's binlog.
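
To make this a bit more concrete, the settings that turn a slave into an intermediate master are few. The sketch below prints such a my.cnf fragment; the function name intermediate_master_cnf, the server id and the binlog base name are made up for illustration.

```shell
#!/bin/bash
# Hypothetical helper: print a my.cnf fragment for an intermediate master.
# $1 is the server id, which has to be unique among all the servers
# in the replication setup.
intermediate_master_cnf() {
    cat <<EOF
[mysqld]
server_id         = $1
# Write a binlog, so that other slaves can replicate from this server.
log_bin           = mysql-bin
# Also write the changes applied by the SQL thread to that binlog.
log_slave_updates = 1
EOF
}

# Made-up server id.
intermediate_master_cnf 2
```

Without log_slave_updates, only changes made directly on the intermediate master would end up in its binlog, and the slaves below it would never see the data coming from the real master.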

This is a pretty good idea, but there are some issues also. To begin with, on the intermediate master, data has to be written several times, once in the relay log, once in the database (and if InnoDB is used, a transaction log is also written) and then we have to write it to the binlog.

Another issue that is in effect here is the single threaded nature of replication (this is different in MariaDB 10 and MySQL 5.7 and up), which means that a slave of a master that runs many threads might get into a situation where the slave can't keep up with the master, even though the slave is configured similarly to the master. Also, a long running statement on the master will hold up replication for as long as that statement runs on the slave, and if we have an intermediate master, then the delay will be doubled (once on the intermediate master and once on the actual slave).

The combined effect of the duplication of the delay and the requirement to write data so many times, leads to the result that an intermediate master maybe isn't such a good idea after all.

As for the replication use-cases, intermediate masters are sometimes used as alternative masters when failing over. This might seem like a good idea, but the issue is that the binlogs on the intermediate master don't look the same as the binlogs on the actual master. This is fixed by using Global Transaction IDs though, but these have different issues, and unless you are running MariaDB 10 or MySQL 5.6, this isn't really an option (and even with MySQL 5.6, there are big issues with this).

What we need then is something else. Something that is a real intermediate master. Something that looks like a slave to the master and as a master to the slave, but doesn't have to write data three times first and that doesn't have to apply all the replication data itself so it doesn't introduce delays into the replication chain.

The slave that attaches to this server should see the same replication files as it would see if it connected to the real master.

MaxScale and the Plugin architecture

So let's introduce MaxScale then, and the plugin architecture. MaxScale has been described before, but one thing that might not be fully clear is the role of the plugins. MaxScale relies much more on plugins than most other architectures; the fact is that without the plugins, MaxScale can't do anything. Everything is a plugin!

The MaxScale core is a multi-threaded epoll based kernel with 5 different types of plugins (note that there might be more than one plugin of each type, and this is mostly the case actually):

• Protocols - These implement communication protocols, including debugging and monitoring protocols. From this you realize that without appropriate protocol plugins, MaxScale will not be able to be accessed at all, so these modules are key. Among the current protocols are MariaDB / MySQL Client and Server protocols.

• Authentication - This type of plugin authenticates users connecting to MaxScale. Currently MariaDB / MySQL Authentication is supported.

• Router - This is a key type of module that determines how SQL traffic is routed and managed.

• Filter - This is an optional type of plugin where the SQL traffic can be modified, checked or rejected.

• Monitor - This type of module is there to monitor the servers that MaxScale connects to, and this data is used by the routing module.

Before we end this discussion on MaxScale, note that there might be several configurations in one single MaxScale setup, so MaxScale can listen on one port for one set of servers and routing setup, and on another port for a different setup.
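
To give an idea of how the plugins show up in practice, here is a sketch of a MaxScale.cnf with two services listening on two different ports, printed by a shell function to keep it in one piece. The function name, the section names, addresses, ports and credentials are all made up, and the module names are the ones I would expect with MaxScale 1.0, so check them against the MaxScale.cnf that comes with your installation.

```shell
#!/bin/bash
# Hypothetical helper: print a small MaxScale.cnf with two services.
# Section names, addresses, ports and credentials are made up.
example_maxscale_cnf() {
    cat <<'EOF'
[maxscale]
threads=1

# Monitor plugin: keeps track of the state of the servers.
[MySQL Monitor]
type=monitor
module=mysqlmon
servers=server1,server2
user=maxuser
passwd=maxpwd

# First service: a router plugin that splits reads and writes.
[RW Split Router]
type=service
router=readwritesplit
servers=server1,server2
user=maxuser
passwd=maxpwd

# Listener for the first service, using the client protocol plugin.
[RW Split Listener]
type=listener
service=RW Split Router
protocol=MySQLClient
port=4006

# Second service on another port: connection routing to the slaves only.
[Read Connection Router]
type=service
router=readconnroute
router_options=slave
servers=server1,server2
user=maxuser
passwd=maxpwd

[Read Connection Listener]
type=listener
service=Read Connection Router
protocol=MySQLClient
port=4008

# The servers, reached using the backend protocol plugin.
[server1]
type=server
address=192.168.0.11
port=3306
protocol=MySQLBackend

[server2]
type=server
address=192.168.0.12
port=3306
protocol=MySQLBackend
EOF
}

example_maxscale_cnf
```

Each router, protocol and monitor named here is a separate plugin module, which is why the same MaxScale core can serve quite different setups side by side.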

With this we have an idea of how MaxScale works, so let's see if we can tie it all up.

MaxScale as a Binlog server

As can be seen from the description of MaxScale, a lot of what is needed to create a Binlog server to use as an intermediate server for slaves is there. What is needed is a router module that acts as a slave to the assigned master and downloads the binlogs from there, using the usual MariaDB / MySQL Replication protocol. This routing plugin also needs to serve the slaves with the downloaded binlog files. In theory, and also in practice, the slaves will not know whether they are connected to the real master or to MaxScale.

Using MaxScale this way as an intermediate master, a slave that connects to MaxScale can work from the same Binlog files and positions as when connected directly to the master, as the files are the same for all intents and purposes. There will be no extra delays for long running SQL statements as these aren't applied on MaxScale; the replication data is just copied from the master, plain and simple. As for parallel slaves, this should work better when using MaxScale as a Binlog server, but this is yet to be tested.

So there should be many advantages to using MaxScale as a binlog server compared to using an intermediate MariaDB / MySQL server. On the other hand, this solution is not for everyone; many just don't drive replication so hard that the load on the master is an issue and an intermediate master is required. Then again, many use an intermediate master also for HA, and in this case it would be advantageous to use MaxScale instead of that intermediate master, which could then still serve the role of a fail-over HA server.

Now, there is one issue with all of this that many of you might have spotted: that cool Binlog server plugin module for MaxScale doesn't exist. Well, I am happy to say that you are wrong, it does exist and it works. A pilot for such a module has been developed by SkySQL together with Booking.com, who had just this need for an intermediate server that wasn't just yet another MariaDB / MySQL server. For the details on the specific use case, see the blog by Jean-François Gagné.
